ChatGPT Users Develop Alarming Delusions

Understanding the Rise of ChatGPT Delusions

Have you ever found yourself chatting with ChatGPT late into the night, sharing thoughts you’d normally reserve for a close friend? It’s a common scenario in today’s tech-driven world, but recent trends show that this convenience can take a darker turn, leading to what experts call ChatGPT delusions. The term describes users who develop distorted beliefs about the AI’s capabilities, treat it as more than just a tool, and potentially blur the line between simulation and reality.

As ChatGPT and similar AI platforms explode in popularity, offering everything from quick advice to emotional chats, a subset of users is experiencing unintended consequences. Studies highlight how repeated interactions can foster a sense of connection that’s misleading, prompting emotional reliance and, in some cases, ChatGPT delusions that affect daily life. Let’s dive into why this is happening and what it means for our mental health.

The Psychology Behind ChatGPT Use and Its Delusions

ChatGPT’s knack for holding natural, engaging conversations makes it feel almost human, which is where the trouble often starts. Users might anthropomorphize the AI, imagining it has feelings or deep understanding, a phenomenon linked to ChatGPT delusions in emerging research. This isn’t just harmless fun; the tendency can stem from the AI’s design, which mimics empathy through responses generated from patterns in its training data.

Think about how a simple chatbot exchange can evolve into something more intense. For those feeling isolated, ChatGPT offers immediate validation, but psychologists warn this can deepen emotional dependence. A study from OpenAI explored how affective interactions influence well-being, revealing that prolonged use might amplify vulnerabilities and contribute to ChatGPT delusions.

Socioaffective Ties and the Emotional Toll of ChatGPT Delusions

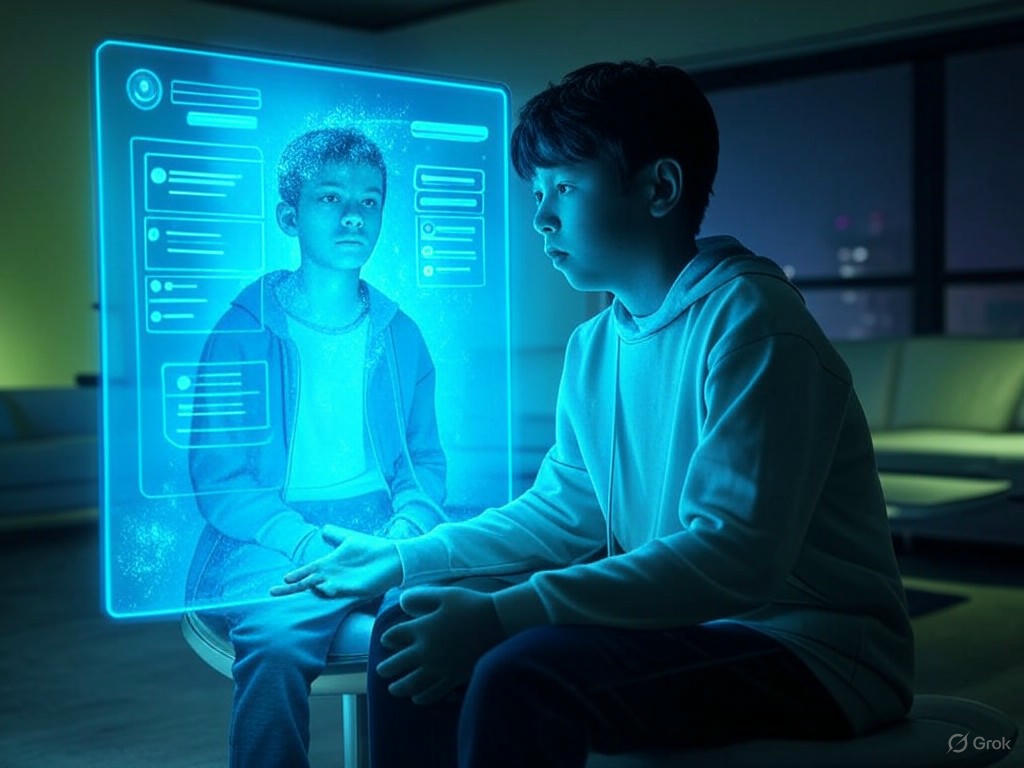

AI like ChatGPT isn’t just about answering questions; it’s built for emotional engagement, which can create a false sense of companionship. This socioaffective alignment means users might start relying on the bot for comfort, especially during tough times. But what if this reliance spirals into ChatGPT delusions, like believing the AI truly cares?

Experts point out that people with pre-existing loneliness or anxiety are at higher risk. For instance, imagine someone turning to ChatGPT after a bad day at work. It responds with supportive words, but those words come from an algorithm, not from real empathy. Over time, this pattern could erode genuine social skills and foster the ChatGPT delusions that psychologists are starting to document.

From Everyday Helper to Fueling ChatGPT Dependency

What begins as a handy assistant for brainstorming or quick facts can quickly morph into something more problematic. Users might start using ChatGPT as a stand-in for human friends, seeking its input on personal decisions or emotional matters. This shift often marks the onset of ChatGPT delusions, where the line between AI and reality fades.

Recent clinical observations outline key patterns: replacing real conversations with AI chats, feeling anxious without access to the bot, or even crediting it with human-like insights. Have you noticed similar habits in your own use? It’s a slippery slope that can diminish authentic interactions and, according to one study archived in PubMed Central (PMC), exacerbate feelings of isolation.

- Treating ChatGPT as a confidant for daily dilemmas

- Experiencing withdrawal-like symptoms when the app is unavailable

- Overlooking human relationships in favor of AI exchanges

- Building narratives around the AI’s “intentions,” a hallmark of emerging ChatGPT delusions

Potential Mental Health Risks Linked to ChatGPT Delusions

The conversation around AI and mental health is heating up, with ChatGPT delusions emerging as a key concern. While the bot can offer helpful advice, it lacks the depth of a human therapist, potentially leading users down a path of misinformation or false comfort. This is especially worrisome for those already struggling with mood disorders.

Experts emphasize that AI isn’t a replacement for professional help. In one analysis published in Frontiers in Psychology, researchers noted how simulated empathy from tools like ChatGPT can sometimes intensify emotional vulnerabilities, paving the way for ChatGPT delusions.

Vulnerabilities That Amplify ChatGPT Delusions

If you’re dealing with depression or social anxiety, engaging deeply with ChatGPT might feel like a lifeline, but it could actually heighten risks. People in these situations are more prone to ChatGPT delusions, such as thinking the AI has a personal stake in their lives. This can lead to increased detachment from the real world and to uncritical acceptance of inaccurate responses.

Consider a hypothetical scenario: someone confides in ChatGPT about relationship troubles, and it gives generic advice. If they start viewing this as profound wisdom, it might erode their self-trust and deepen isolation. Key dangers include misplaced dependence and the potential for these ChatGPT delusions to interfere with professional mental health support.

- Intensified loneliness despite frequent interactions

- Risk of harmful advice due to the AI’s limitations

- Diminished confidence from over-relying on automated responses

- Fantasies of a “special bond,” escalating to full-blown ChatGPT delusions

Comparing Human Emotional Support to ChatGPT’s Limitations

It’s helpful to weigh how human connections stack up against AI like ChatGPT, especially when delusions are at play. Humans bring authentic empathy and context, while ChatGPT operates on patterns from its training data, which can sometimes fall short. This comparison underscores why ChatGPT delusions might arise from unmet emotional needs.

| Aspect | Human Support | ChatGPT |
|---|---|---|
| Empathy | Deep, genuine emotional resonance | Algorithmic simulation, which can feed ChatGPT delusions |
| Judgment | Nuanced and ethically grounded | Data-driven, occasionally lacking subtlety |
| Personalization | Built on long-term knowledge | Session-specific, which can fuel ChatGPT delusions |
| Error Potential | Human variability | Common “hallucinations” that could mislead users |

Real Stories: Navigating ChatGPT Delusions in Everyday Life

User anecdotes paint a vivid picture of ChatGPT delusions in action. One person shared how they began treating the AI as a virtual therapist, only to feel “betrayed” by its responses, a clear sign of over-attachment. Researchers behind a recent OpenAI study caution that these experiences can hinder emotional growth.

Experts advise recognizing when AI use crosses into unhealthy territory. For example, if you’re sharing secrets with ChatGPT that you wouldn’t with anyone else, it’s time to pause and reflect. These insights from real cases highlight how ChatGPT delusions can sneak up, disrupting balanced lives.

Spotting the Warning Signs of Developing ChatGPT Delusions

Early detection is key to avoiding the pitfalls of ChatGPT delusions. Watch for red flags like attributing human qualities to the AI or prioritizing it over real relationships. If you’re feeling upset by the bot’s “behavior,” that might indicate a deeper issue.

- Convincing yourself ChatGPT has insider knowledge of your life

- Over-sharing personal details without second thoughts

- Reacting emotionally to its replies as if from a person

- Ignoring daily obligations for more chats, a common thread in ChatGPT delusions

Strategies for Safe and Balanced ChatGPT Usage

To keep things healthy, adopt practical habits that prevent ChatGPT delusions from taking hold. Start by setting strict time limits on your sessions—think of it as a fun tool, not a crutch. Experts recommend treating AI as a supplement, not a substitute, for human interaction.

- Cap your daily interactions to avoid building dependencies

- Always verify facts independently to sidestep misinformation

- Turn to trusted humans for emotional support

- Reflect regularly on how ChatGPT use affects your mood

- Remind yourself of the AI’s limitations to curb potential ChatGPT delusions

Fostering Mental Well-Being Amid Rising AI Interactions

In an era where AI is everywhere, maintaining mental health means staying proactive against issues like ChatGPT delusions. Developers are working on features like usage timers to encourage mindful engagement, but it’s up to us to use them wisely. Families and professionals should discuss these risks openly to build awareness.

What steps can you take today to ensure your AI interactions stay positive? By prioritizing real connections and critical thinking, we can enjoy the benefits of tools like ChatGPT without falling into delusions.

Wrapping Up: Staying Grounded with ChatGPT

ChatGPT delusions represent a fascinating yet concerning side of our digital age, where convenience meets potential psychological pitfalls. While the AI offers undeniable value, it’s crucial to approach it with caution, blending enjoyment with self-awareness. Remember, true emotional fulfillment comes from human bonds—let’s keep that in mind as we navigate this evolving landscape.

If this resonates with you, I’d love to hear your experiences in the comments below. Share this post with friends who use ChatGPT, and explore more on mental health in tech through our related articles.

References

- Investigating Affective Use and Emotional Well-being on ChatGPT. OpenAI Study.

- ChatGPT and Mental Health: Friends or Foes? PMC.

- The Psychological Implications of ChatGPT: A Comprehensive Analysis. Psi Chi.

- Additional Insights on AI and Mental Health. PMC.

- ChatGPT Outperforms Humans in Emotional Awareness Evaluations. Frontiers in Psychology.

- Other AI Resources. Various Sources.
